I often get the question, "why does it matter where COVID-19 came from?"

I can see two main reasons. The first is that we need to know in order to prevent future pandemics. If SARS-CoV-2 jumped from a bat to an intermediate host to a human, then we want to know everything we can about that process.

Spillovers don't happen randomly. Humans and specific kinds of animals, usually mammals or birds, come into contact in just the right ways for a virus to jump from one species to another.

It's common for viruses to jump from animals to humans temporarily, but when they do, they usually aren't good at transmitting from human to human. We need to understand the circumstances in which viruses successfully evolve human-to-human transmission.

In the case of SARS-CoV-2, there are a lot of questions. Were miners, farmers, or others in Yunnan regularly exposed to relatives of the virus? Before 2020, what fraction of the population there tested positive for antibodies to SARS-like coronaviruses?

What kinds of animals might SARS-CoV-2 be able to infect? Cats and mink can be infected and probably transmit among themselves; are there other animals for which this is true?

How likely is it that SARS-CoV-2 was spreading person to person before Wuhan? How would we be able to detect that, now and in the future? Should countries make agreements with each other to share data about emerging pandemics?

How likely is it that SARS-CoV-2 was directly engineered? Or passaged through lab animals or tissue culture? How would we know? Are there, or could there be, safety procedures that would prevent gain-of-function research from being done without an audit trail?

How can China, the US, and the rest of the world cooperate on investigating pandemic origins given their respective political incentives? Or, if not now, how could systems be set up to incentivize and enable that cooperation in the future?

I've observed in the nuclear security world that political leaders often want to de-escalate and avoid the risk of a war that would be extremely costly to both sides, but feel constrained in their ability to cooperate by political incentives, specifically their base wanting them to look tough.

This brings me to the second reason I think figuring out the origins of the COVID-19 pandemic is important. We, as a global civilization, need to figure out a *process* for investigating major catastrophes, both potential and actual, and methods for preventing them.

Basically, we lack robust methods of collaboration around issues important to every country. We need systems set in place during "peacetime" that will be robust to tenser political environments during and after a crisis.

The work to understand how COVID-19 happened is practice for future work where the stakes may be even higher. For example, large-scale geoengineering projects would require a much greater capacity to reach consensus on difficult scientific questions.

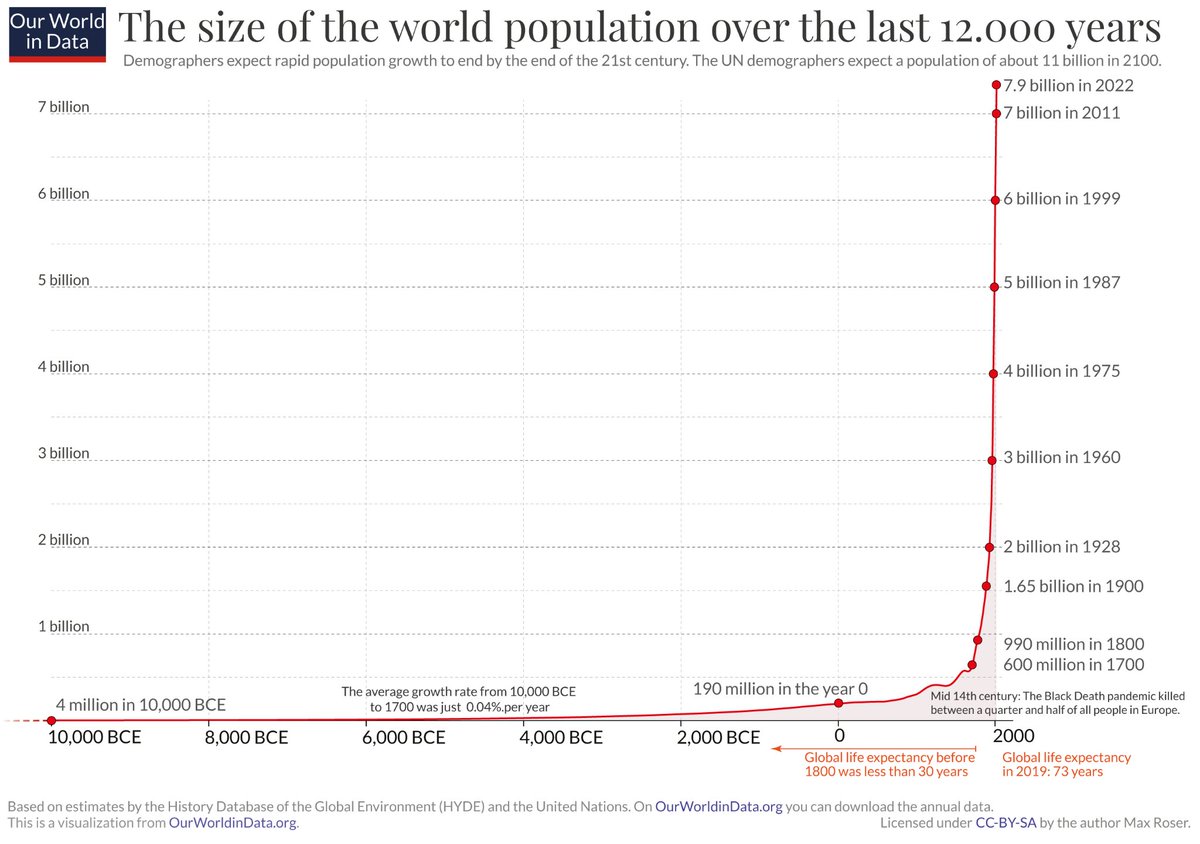

As the population grows and technology improves, we can do a lot more. Our power is growing, but we cannot anticipate the effects of that growing power.

For example, suppose you stepped into a time machine and went back to 2018 with the genome of SARS-CoV-2. No one would be able to predict what the virus would do if it spread among the human population without actually releasing it.

You might try to use animal models to study its transmissibility, and separately use different animal models to study the disease severity, but both would be difficult and the answers would not be precise. This is true regardless of whether the virus was natural or engineered.

Human populations are essentially ecosystems for pathogens, and we have very little ability to model how a new species will fare in an ecosystem. This gap is especially dangerous when the new organism might have unusually high fitness.

This is both metaphorically and literally true. As a metaphor, the species is a new technology and the ecosystems are human societies. Introduce algorithmically mediated social media and you can't predict what will happen: neither its rate of growth nor its first- and second-order effects.

And it's literally true of new organisms we might create, whether a novel virus or a new kind of broccoli. This isn't to say we have *no* predictive power when it comes to the effects of something very new. A new broccoli is unlikely to damage its environment.

The more powerful or fit a new species or technology is, the harder it will be to determine its effects.
