Google admits Kubernetes container tech is so complex, it's had to roll out an Autopilot feature to do it all for you

More expensive, less flexible, but easier and safer to use

Google has recognised that users struggle to configure Kubernetes correctly and introduced a new Autopilot service in an attempt to simplify deployment and management.

Two things everyone knows about Kubernetes are: first, that it has won in the critically important container orchestration space, and second, that its complexity is both a barrier to adoption and a common cause of errors.

Even Google, the inventor and biggest promoter of Kubernetes, admits this is the case. "Despite 6 years of progress, Kubernetes is still incredibly complex," said Drew Bradstock, product lead for Google Kubernetes Engine (GKE). "What we've seen in the past year or so is a lot of enterprises are embracing Kubernetes, but then they run headlong into the difficulty."

GKE is a Kubernetes platform that runs primarily on Google Cloud Platform (GCP), but also on other clouds or on-premises, where it is part of Anthos.

This is the rationale for Autopilot, which is a fully managed deployment to GKE, provided it is running on GCP. But is GKE not already a managed service? It is – the difference is that Autopilot is both more opinionated and more automated than plain GKE.

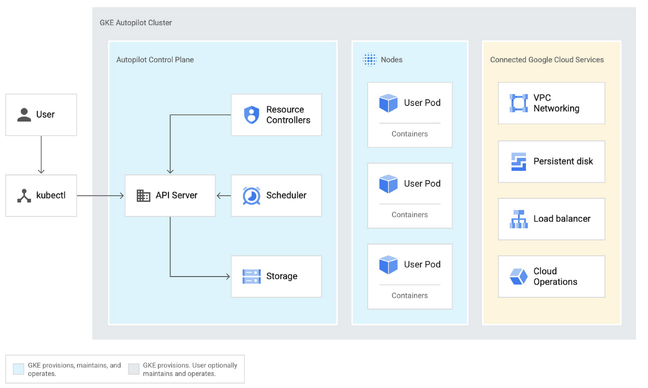

Kubernetes has the concept of clusters (a set of physical or virtual servers), nodes (individual servers), pods (a management unit representing one or more containers on a node), and containers themselves. GKE is fully managed to the cluster level. Autopilot extends that to nodes and pods.
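As a rough illustration of that hierarchy, the sketch below models the four layers in Python and records which of them Google manages in each mode, going only by the description above. The class names, the `MANAGED_BY_GOOGLE` table, and the `user_managed_layers` helper are all made up for this example and are not part of any Kubernetes or GKE API.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Container:
    image: str


@dataclass
class Pod:
    # A pod groups one or more containers on a node.
    containers: List[Container]


@dataclass
class Node:
    # A node is an individual physical or virtual server.
    pods: List[Pod] = field(default_factory=list)


@dataclass
class Cluster:
    # A cluster is a set of nodes.
    nodes: List[Node] = field(default_factory=list)


ALL_LAYERS = {"cluster", "node", "pod", "container"}

# Assumption: this table simply restates the article's summary of each mode.
MANAGED_BY_GOOGLE = {
    "standard": {"cluster"},
    "autopilot": {"cluster", "node", "pod"},
}


def user_managed_layers(mode: str) -> Set[str]:
    """Return the layers left for the customer to configure in a given mode."""
    return ALL_LAYERS - MANAGED_BY_GOOGLE[mode]
```

On this simplified view, an Autopilot customer is left with only the containers to worry about, while a standard GKE customer still has to configure nodes and pods as well.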

The best place to look in order to understand Autopilot's features and limitations is Google's own documentation, taking careful note of the options marked "pre-configured", which means they cannot be changed.

In essence it is another way to purchase and manage GKE resources that gives less flexibility but more convenience. Since Google manages more of the configuration, it offers a higher SLA of 99.9 per cent uptime for Autopilot pods in multiple zones.
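To put that figure in context, the following sketch converts an uptime percentage into the downtime it permits over a period. It is plain arithmetic, assuming a 30-day month and that every minute counts equally; how Google actually measures uptime for SLA purposes is defined in its own terms, not here. The function name is ours.

```python
def allowed_downtime_minutes(sla_percent: float, days: int = 30) -> float:
    """Minutes of downtime permitted by an uptime percentage over `days` days."""
    if not 0.0 <= sla_percent <= 100.0:
        raise ValueError("sla_percent must be between 0 and 100")
    total_minutes = days * 24 * 60
    return total_minutes * (100.0 - sla_percent) / 100.0


if __name__ == "__main__":
    minutes = allowed_downtime_minutes(99.9)
    print(f"99.9 per cent over 30 days allows about {minutes:.1f} minutes of downtime")
```

On those assumptions, 99.9 per cent works out to roughly 43 minutes a month.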

On Google's cloud, regions are composed of three or more zones. Putting all resources in a single zone is less resilient than spreading them over multiple zones, while extending failover to multiple regions maximizes resilience. Autopilot clusters are always regional rather than zonal, which is good for resilience but comes at a higher cost.

Other Autopilot constraints are that the operating system is always Google's own "container optimized" Linux with containerd, not Linux with Docker or Windows Server. The maximum number of pods per node is 32, as opposed to 110 on standard GKE.
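The pod limit has a knock-on effect on node count. The sketch below works out the minimum number of nodes needed to host a given number of pods under each limit, using the two figures quoted above. It deliberately ignores CPU and memory requests, which in practice often bind before the pod limit does, so treat the result as a lower bound. The function and constant names are ours.

```python
import math

AUTOPILOT_MAX_PODS_PER_NODE = 32   # figure quoted in the article
STANDARD_MAX_PODS_PER_NODE = 110   # figure quoted in the article


def min_nodes_for_pods(pod_count: int, max_pods_per_node: int) -> int:
    """Lower bound on node count, considering only the pods-per-node limit."""
    if pod_count < 0 or max_pods_per_node <= 0:
        raise ValueError("pod_count must be >= 0 and max_pods_per_node > 0")
    return math.ceil(pod_count / max_pods_per_node)


if __name__ == "__main__":
    pods = 1000  # hypothetical workload size
    print("Autopilot:", min_nodes_for_pods(pods, AUTOPILOT_MAX_PODS_PER_NODE))
    print("Standard: ", min_nodes_for_pods(pods, STANDARD_MAX_PODS_PER_NODE))
```

Since Autopilot provisions nodes itself, the count matters less to the customer than it would on standard GKE, where nodes are what you pay for.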

There is no SSH access to nodes; Autopilot nodes are locked down. "Removing SSH was a big deal," Bradstock said. It is somewhat limiting, but Bradstock told us that it is based on research "where people had misconfigured things, with the best intentions." GPU and TPU (Tensor Processing Unit) support is not available in Autopilot, though it is planned for the future.

Money, money

The pricing model is different too, being based on the CPU, memory, and storage used by pods, rather than on compute engine instances (virtual machines) used. There is also a fee of $0.10 per hour for each Autopilot cluster. GKE standard also has a $0.10 per hour fee for each cluster; you pay one but not both.
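The difference between the two billing models can be sketched as follows. Only the $0.10 per hour cluster fee comes from the figures above; every other rate is a parameter the caller must supply, and the function and parameter names are ours. This is a simplified model, not Google's billing formula.

```python
CLUSTER_FEE_PER_HOUR = 0.10  # USD per cluster per hour, as quoted above


def autopilot_bill(hours, vcpu, memory_gb, storage_gb,
                   vcpu_rate, memory_rate, storage_rate, clusters=1):
    """Autopilot-style bill: driven by the resources the pods use.

    All *_rate arguments are hypothetical hourly prices per unit.
    """
    usage_per_hour = (vcpu * vcpu_rate
                      + memory_gb * memory_rate
                      + storage_gb * storage_rate)
    return hours * (usage_per_hour + clusters * CLUSTER_FEE_PER_HOUR)


def standard_bill(hours, vm_count, vm_rate, clusters=1):
    """Standard-style bill: driven by the virtual machines provisioned.

    vm_rate is a hypothetical hourly price per instance.
    """
    return hours * (vm_count * vm_rate + clusters * CLUSTER_FEE_PER_HOUR)
```

Whichever mode is chosen, the cluster fee alone comes to about $72 per cluster over a 30-day month (720 hours at $0.10).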

The obvious question of whether Autopilot or GKE standard is more expensive is not easy to answer. Since it is a somewhat premium deployment, Autopilot will cost more than a carefully optimised GKE standard deployment. "There is a premium over regular GKE," said Bradstock, "because we've got full SRE (Site Reliability Engineering) support and SLA support, it's not just the functionality."

That said, a GKE standard deployment which is under-utilised because it is hard to estimate the correct specification for the compute instances could cost more than Autopilot.
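A back-of-the-envelope way to see where the crossover lies: suppose standard GKE bills for all provisioned capacity, while Autopilot bills only for the fraction of that capacity the pods actually request, at some premium per unit. Autopilot then comes out cheaper whenever utilisation is below 1 / (1 + premium). The sketch below encodes that. The size of the premium is not given by Google in this article, so the 25 per cent used in the example is purely hypothetical, and the cluster fee is left out because it is the same in both modes.

```python
def break_even_utilisation(premium: float) -> float:
    """Utilisation below which Autopilot is cheaper than standard GKE.

    premium: hypothetical fractional mark-up of Autopilot's per-unit price
             over the equivalent standard price (0.25 means 25 per cent).
    """
    if premium < 0:
        raise ValueError("premium must be non-negative")
    return 1.0 / (1.0 + premium)


def autopilot_is_cheaper(utilisation: float, premium: float) -> bool:
    """True if pods using `utilisation` of provisioned capacity cost less on Autopilot."""
    return utilisation * (1.0 + premium) < 1.0


if __name__ == "__main__":
    premium = 0.25  # hypothetical, for illustration only
    print(f"Break-even utilisation: {break_even_utilisation(premium):.0%}")
    print("Cheaper at 60% utilisation?", autopilot_is_cheaper(0.60, premium))
    print("Cheaper at 90% utilisation?", autopilot_is_cheaper(0.90, premium))
```

The model is crude, but it captures the trade-off: the worse you are at sizing instances, the more attractive per-pod billing looks.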

Why not just use Cloud Run, which allows container workloads to be deployed and run without any configuration of clusters, nodes, and pods, even though it still runs on GKE? "Cloud Run is a great opinionated developer environment, one app can spin up from zero to 1,000 and back to zero, that's where Cloud Run is targeted," Bradstock told us. "Autopilot is for people who want to do less work but they still want Kubernetes, they want to be able to see everything, they want to use third-party scripts, they want to build their platform."

Is compatibility with add-ons an issue with Autopilot's constrained environment? "There are things that don't work on day one," said Bradstock, though some third-party tools, such as Datadog monitoring, are already supported, as are DaemonSets, a Kubernetes feature for running a service on every node that is used by many add-ons.

The configuration of storage, compute, and the network means "we have to give up some level of flexibility and some connections," he told us. "But we definitely want the third-party ecosystem to run on it."

The Autopilot feature means that Google offers a wider range of Kubernetes options, from most to least hands-on. The trade-off is not only higher cost and less flexibility, but also potential deskilling of enterprise admins, though the argument is that businesses should focus on what provides business value rather than requirements that can be fulfilled by a third party.

Google's engineering has a better reputation than its customer support. Software engineer Kevin Lin, who admittedly is ex-Amazon, wrote recently of his experience as a new customer for AWS versus Google.

Google was slower, less helpful, and ended up referring him to a third-party partner, he said. "The initial onboarding call was entirely about how much money I was planning on spending with Google (as opposed to the Amazon call where they wanted to help me architect my service). Google Cloud has really nice ergonomics and world-class engineers but an awful reputation for customer support. My anecdotal experience seems to support this," he said.

Proof, if any were needed, that good engineering is not the only thing that matters for GCP to increase its cloud market share. ®