Facebook will no longer recommend health groups

Groups have been a major source of misinformation on the platform.

Facebook says that groups dedicated to health-related topics are no longer eligible to appear in recommendations. The update is part of the company’s latest effort to fight misinformation.

“To prioritize connecting people with accurate health information, we are starting to no longer show health groups in recommendations,” Facebook writes in a statement. Facebook notes that users will still be able to search for these groups and invite others to join them, even though the groups will no longer appear in suggestions.

Facebook groups, especially ones that dabble in health-related topics, have long been problematic for the company. Groups dedicated to anti-vaccine conspiracy theories, for example, have been linked to QAnon and COVID-19 disinformation, often through the company’s own algorithmic suggestions. Mark Zuckerberg recently said the company won’t take down anti-vaccine posts the way it does with COVID-19 misinformation.

Speaking of QAnon, Facebook says it’s taking an additional step to keep groups associated with the conspiracy theory from spreading by “reducing their content in News Feed.” The company previously removed hundreds of groups associated with the movement, but hasn’t rooted out its presence entirely.

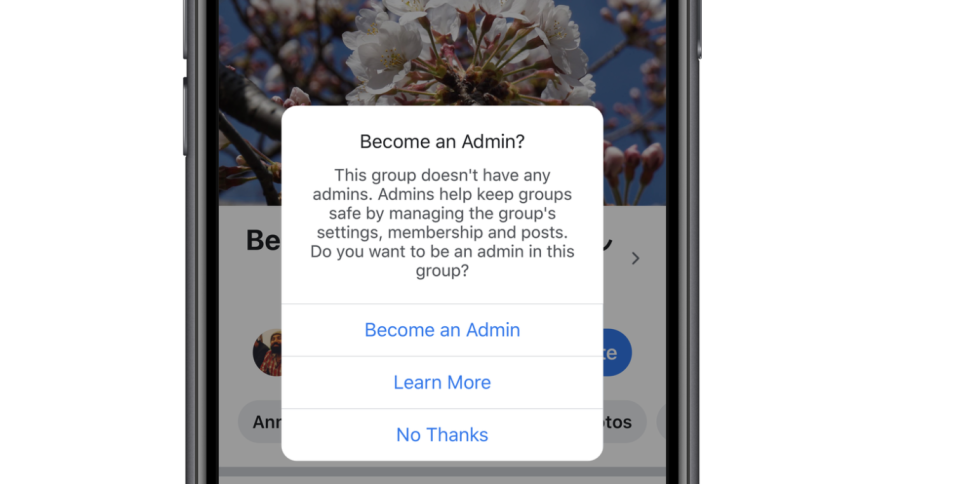

Finally, Facebook will now archive groups that no longer have an active admin. “In the coming weeks, we’ll begin archiving groups that have been without an admin for some time,” Facebook writes. In the future, the company will suggest admin roles to members of groups that lack an admin before archiving those groups.

Facebook notes that it penalizes groups that repeatedly share false claims debunked by its fact checkers, and that it has removed more than a million groups in the last year for repeat offenses or otherwise breaking its rules.

But critics have long said that Facebook doesn’t do enough to police groups on its platform, which have been linked to disinformation, harassment and threats of violence. The company came under fire last month for failing to remove a Wisconsin militia group, which had organized an armed response to protests in Kenosha, until the day after a deadly shooting. And a number of Facebook groups have been blamed for hampering the emergency response to devastating wildfires in Oregon after spreading baseless conspiracy theories about how the fires were started. Facebook eventually began removing these claims after emergency responders begged people to stop sharing the rumors.