Technology is reshaping every aspect of our lives. Once a week in The Future of series, we examine innovations in important fields, from farming to transportation, and what they will mean in the years and decades to come.

A decade ago, Google’s ambitions seemed unchecked: The company would design self-piloting cars through Waymo, sponsor moonbases, and even conquer death. One of the company’s plans: Smart contact lenses to measure the glucose level of your tears — and perhaps help reduce the damage caused by diabetes. “It’s still early days for this technology, but we’ve completed multiple clinical research studies, which are helping to refine our prototype,” wrote Google’s Brian Otis and Babak Parviz back in 2014.

Seven years later, the company’s ego remains just as inflated, but the smart contact lenses, developed by Verily, the life sciences unit of Google parent Alphabet, are nowhere to be seen; the side project was officially abandoned in 2018. Yet smart lenses are finally becoming a reality, thanks to the efforts of countless scientists and engineers. And the future of this intriguing technology is nothing like what you might expect.

Today (-ish): Long a dream, smart contacts are here

There have been many efforts to advance contact lenses, of course. Acuvue sells Oasys with Transitions lenses that automatically darken in sunlight, like tiny sunglasses for your pupils, and researchers have been working for years on smart lenses that zoom on demand, measure chemical levels in your body, and administer drugs (notably antihistamines). But smart ones? They’ve never really made it to market.

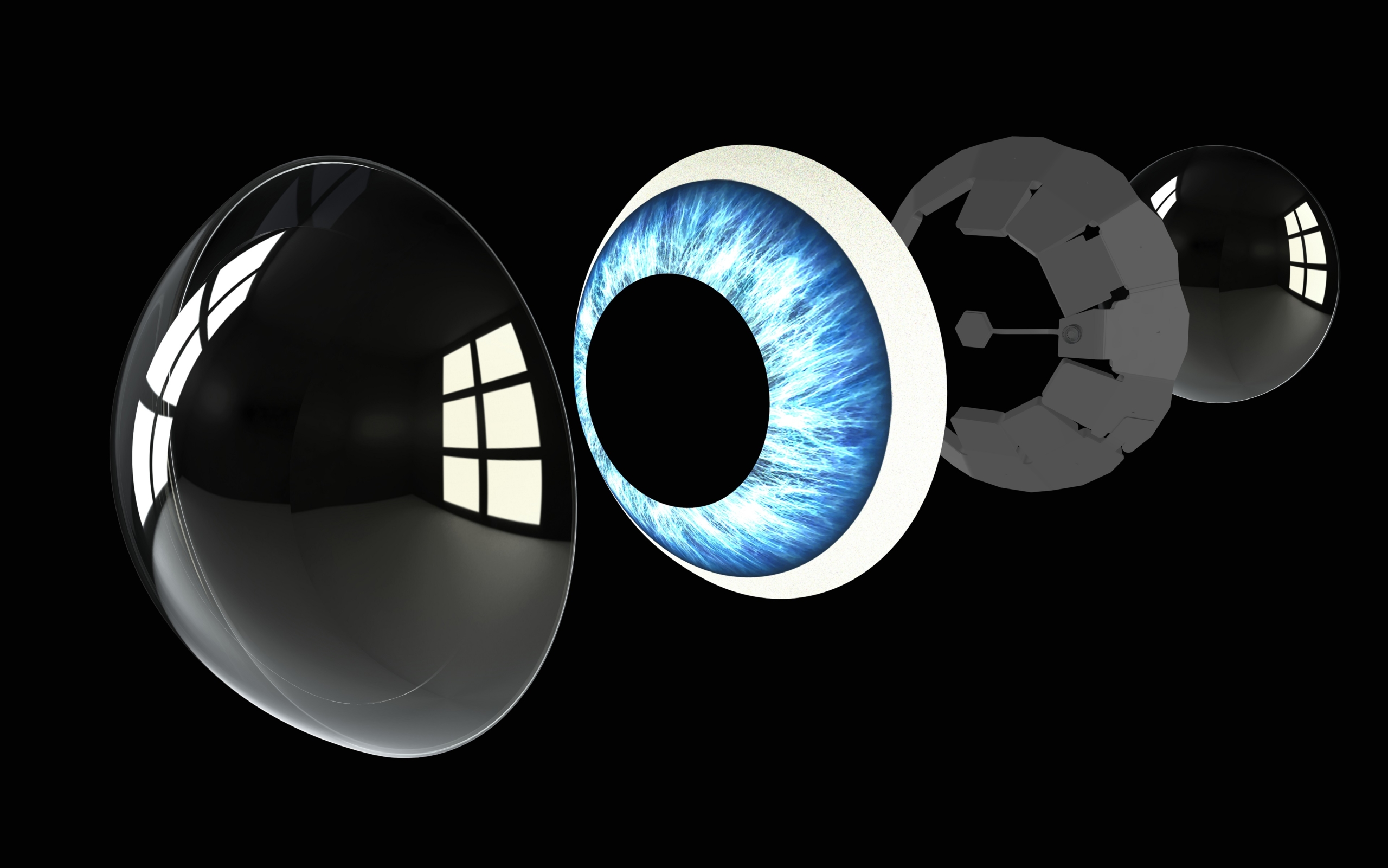

InWith Corp. is about to change that. At CES 2021, the company unveiled a method to place augmented vision display chips into the soft hydrogel contact lenses that millions of people wear daily. Smart contact lenses! In early 2020, the company announced a partnership with Bausch + Lomb, showing flexible electronic circuitry embedded directly into lenses. No, you can’t buy ’em yet. But they’re clearly almost here — and not just from InWith.

“It’s closer than you’d think, but it’s not tomorrow,” Steve Sinclair, senior vice president of product at Mojo Vision, told me. Mojo’s the big competitor to InWith, and has been secretly engineering lenses embedded with an enormous array of proprietary technology, including a nearly invisible Micro LED display under half a millimeter in size (think a grain of sand), tiny inertial sensors such as accelerometers and gyroscopes, a super-efficient image sensor to gauge the world around you, adorably tiny batteries, and more.

Like InWith’s lenses, Mojo’s are “just around the corner,” Sinclair told me. About a dozen people in his company have worn the latest prototypes, and a new model this summer promises even more advancements. What can you do with them? Augmented reality applications probably leap to mind, or at least they do for me: Direction overlays that guide you through unfamiliar city streets, information about the people and buildings you pass by, and so on. But the power of a display in your eye is nothing like what you might expect. Sinclair offers different use cases, things that give you your mojo back (hence the name of the company): The text of a big speech, notes for a presentation, a checklist for a major repair project, and so on.

One area that will be important is performance athletics: Today’s runners have a world of metrics on their wrists, but who wants to navigate a menu while sprinting at top speed? Imagine the power of biometric data directly in your field of view.

And as for AR? Eh, we’ll get there.

Meanwhile, smart lenses hold huge immediate promise for people with low vision — glaucoma, macular degeneration, and so on. Mojo’s chips will be able to take in the scene before a person and in real time add edges to buildings, boost the contrast around signs and people, and help those with dim vision navigate the world around them. This could be a game changer — but it’s just the beginning.

Tomorrow: Is infrared vision in your future?

Vision is a complex dance between your hardware (meaning your retina, lens, the tiny rods and cones tucked away in there, and so on) and your brain, which interprets the electrical impulses sent from your eyes and translates them into images. Your brain compensates for flaws in your hardware, to some extent. In the future, it may not have to.

Smart lenses could someday correct for an imperfect lens, or even replace it entirely, fixing those electrical impulses before your brain receives them for interpretation. Smart lenses could also inject extra data ahead of your natural lenses, giving you super-binocular vision, or infrared vision. Heck, researchers have already scienced up supermice with infrared vision. Why not you? The potential powers you could receive with a fresh pair of contacts are limitless, once the tech is perfected.

“We’re just on the very precipice of figuring out what some of those things are,” Sinclair told me. “The sky’s the limit on what we can do with the information and the platform we’ve built.”

Peer further down the road, into the post-smartphone era, and lenses like this could replace our very eyes. Futurist Gary Bengier, a former Silicon Valley technologist, writer, and philosopher, envisions a world 140 years from now, where displays aren’t just worn in contact lenses but are actually part of you, thanks to a chip inserted behind your ear and connected to a corneal implant. In his new book Unfettered Journey, he describes how artificial intelligence and mind-machine interfaces combine with retinal implants to essentially build Wikipedia directly into your body:

“He picked out the distinctive towers of an occasional fusion plant. Joe hadn’t taken a long flight since grad school. The scene below him awakened his scientific curiosity. He let the keyword search fill his head, opening the NEST corneal connection, and images and words filled the viewer occupying the corner of his eye.”

Wild stuff, right?

“You basically use this to connect to the cloud, the net, whatever. It’s like talking to Siri 15.0,” Bengier told me recently. In the not-too-distant future, he believes, smarter artificial intelligence will combine with data about where you are and sensors that pick up on your every whim. You’ll be able to simply think about pizza and you’ll see a little map on your cornea showing where you can buy one.

Now that’s a vision for vision.